Taste in Translation

Decoding sensory information for augmented dining experiences

Our senses play an integral role in how we process emotions. While sight, sound, touch (and sometimes smell) can be conveyed through visual forms that people understand, taste is especially hard to visualize because it is so subjective and abstract.

The lack of sensory information about food’s taste (especially in restaurants) can make it difficult for people to understand unfamiliar dishes. Information on restaurant menus is usually limited to a list of names, ingredients and prices. These say little about flavor, so diners struggle to imagine what a dish would actually taste like.

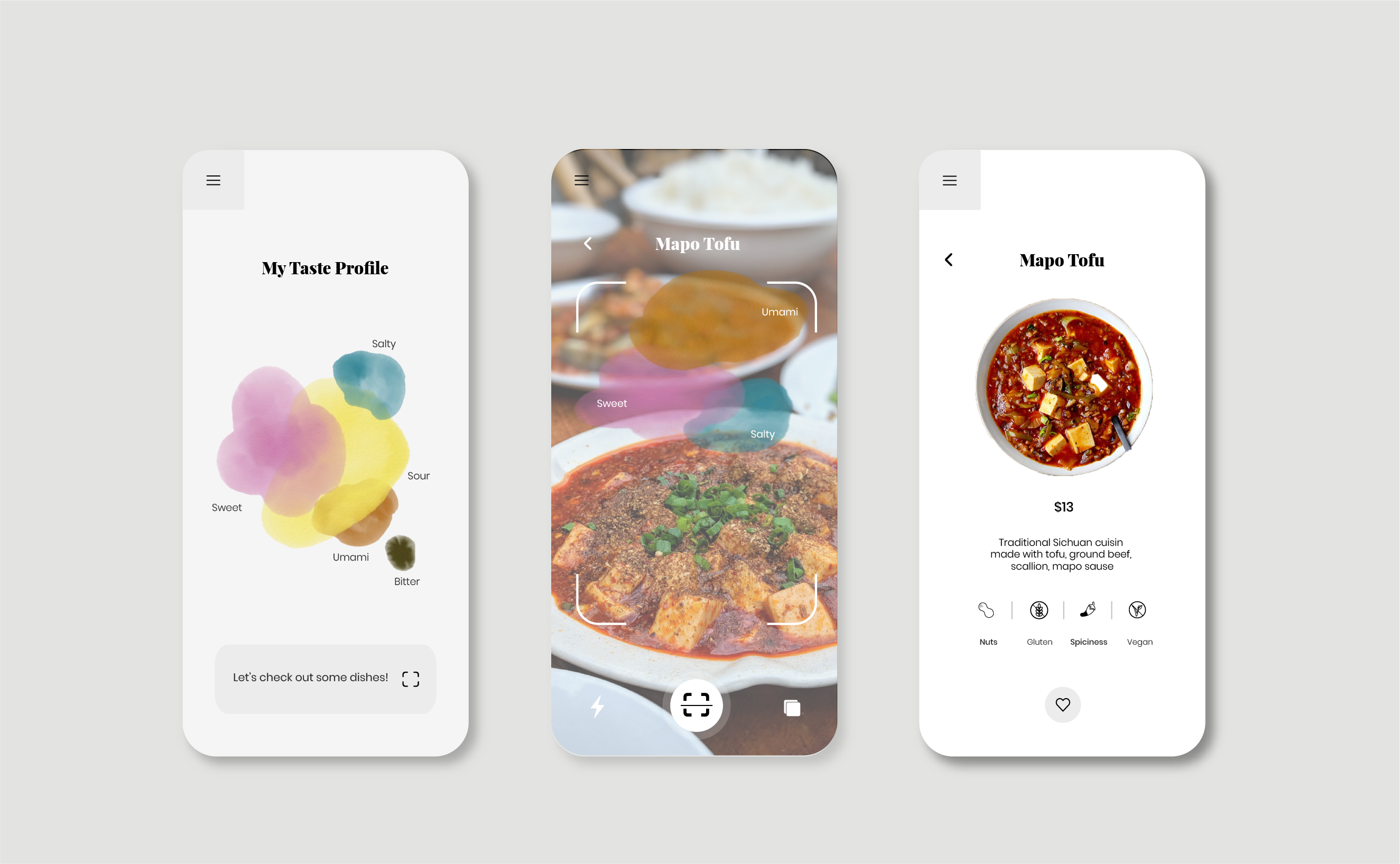

This project explores ways of communicating sensory information from a human-centered perspective using augmented reality. By looking at existing examples of taste visualization and conducting original research experiments, I created a visual system that represents the five basic tastes. The system will be presented through a mobile AR app in which users scan a menu or dish for insights.

Design Process

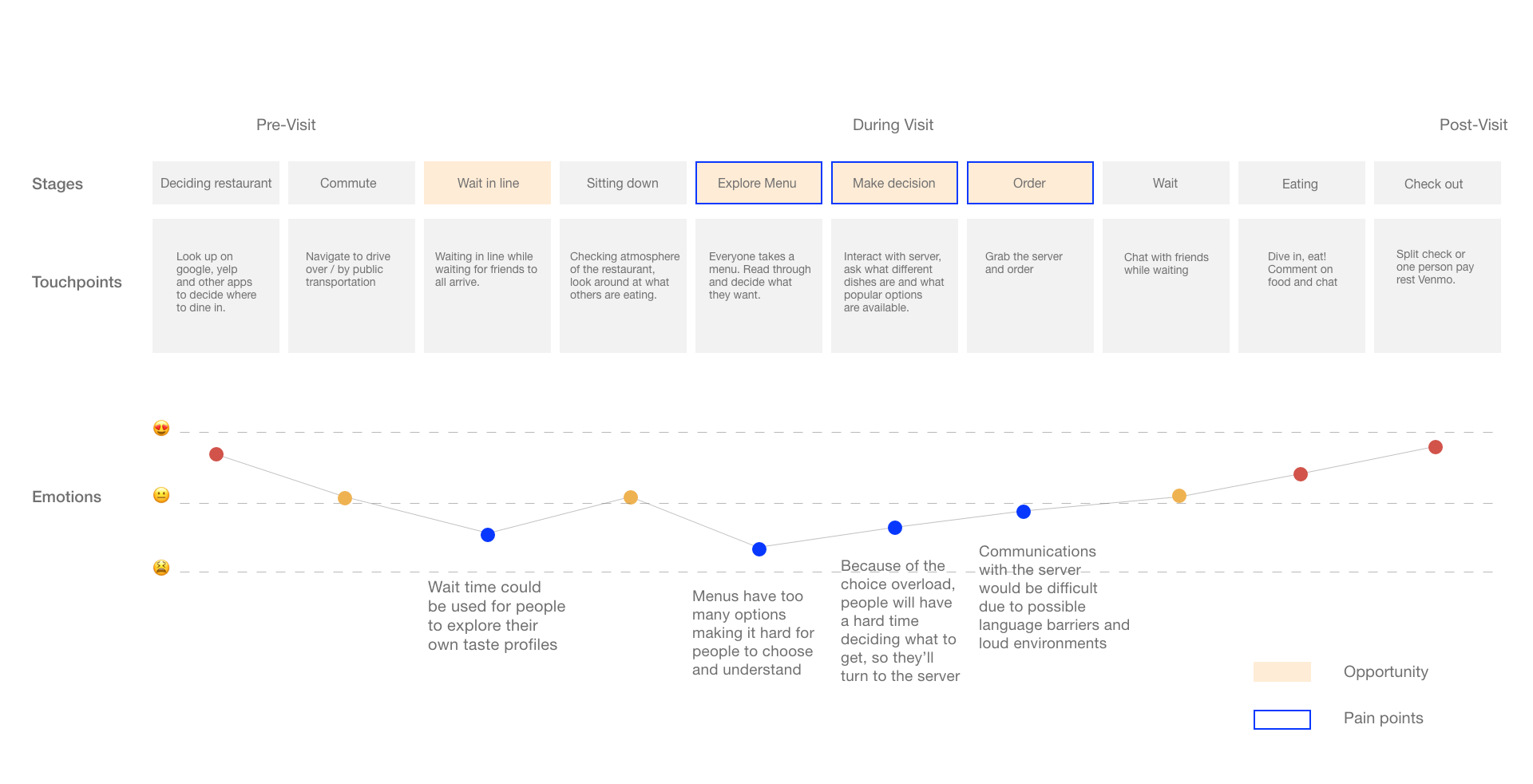

Diner's Journey

Storyboard

Barriers to eating out and proposed experience

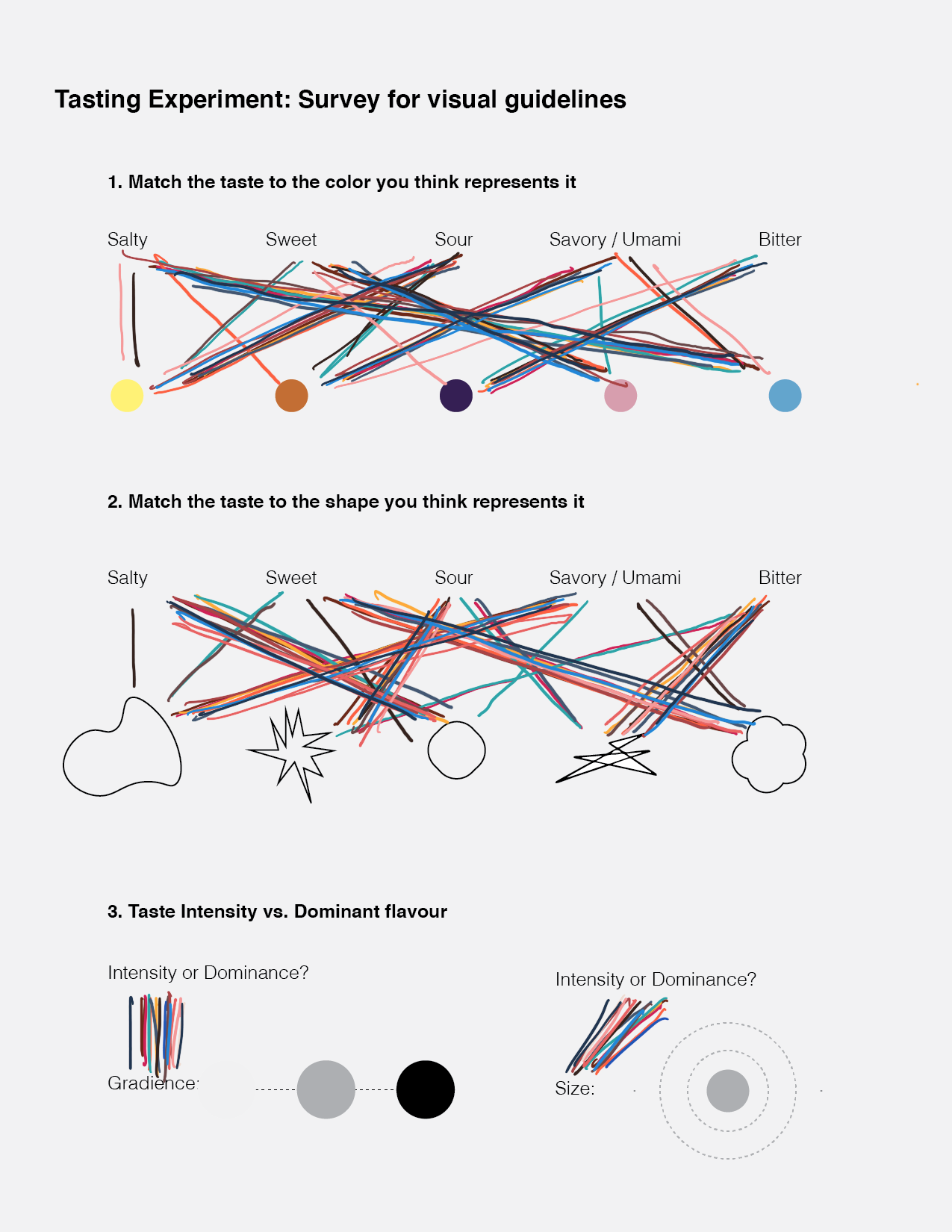

Taste Sculptures

A visual exploration of ways to communicate taste

How do we SEE taste? Very few of us are used to the idea of giving taste a visual form. Some people with synesthesia, a neurological condition, can see senses other than sight, such as sound and taste. How can we bring a synesthesia-like experience to everyone to help communicate this sensory information?

I conducted a tasting experiment in which participants tasted several dishes, each with a different dominant taste. The image on the right shows part of the collection of sculptures that participants made to express what the different dishes made them feel.

This collection serves as a visualization database in which I looked for commonalities to synthesize into my visual system. Key findings show that round, smooth outlines usually convey sweetness, and sometimes umami. Sharper edges are directly associated with salty, sour, and bitter tastes.

Despite the different forms individuals made, there are common elements that define a comprehensive idea of taste visualization.

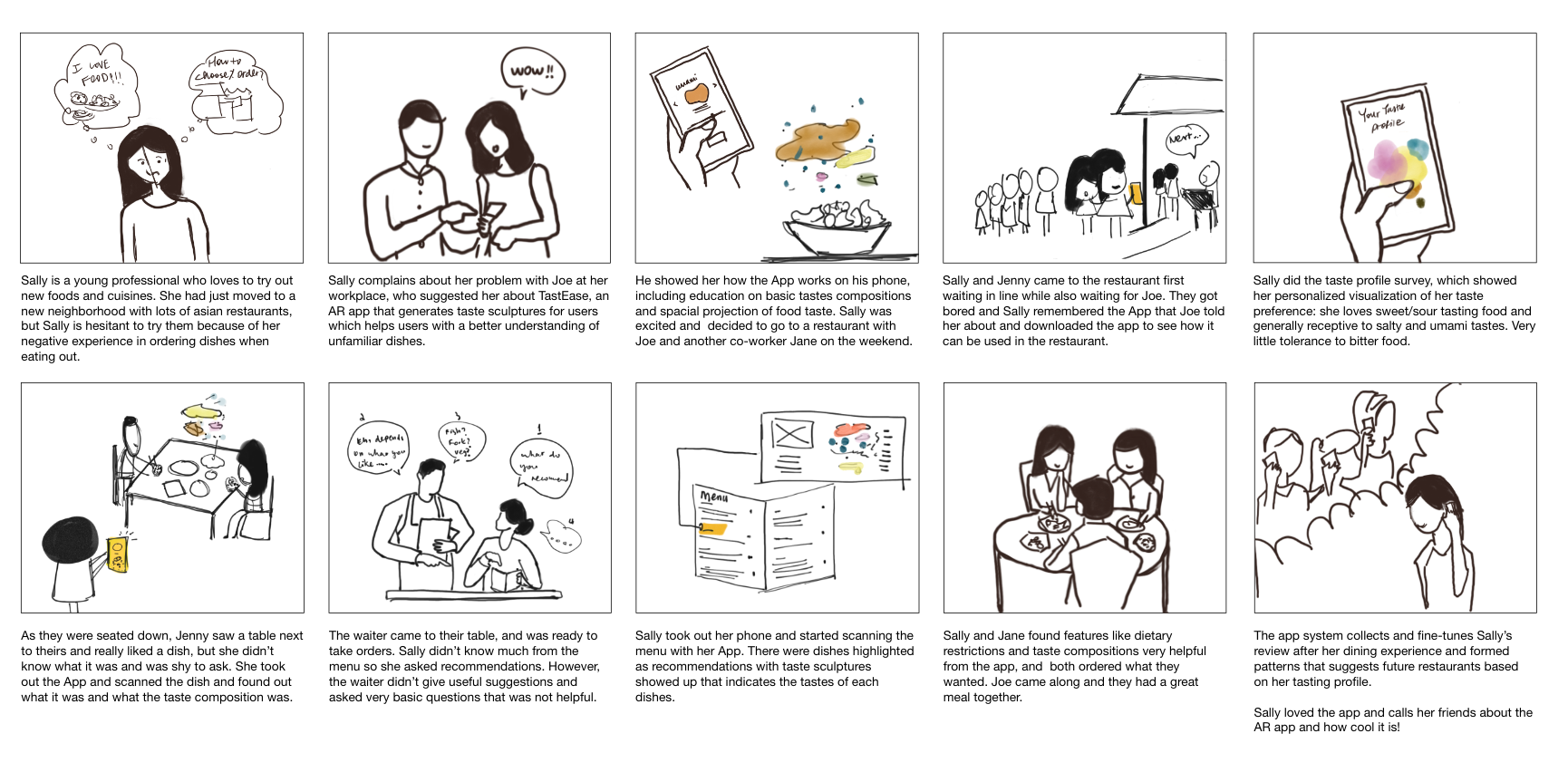

Taste Visualization Visual Survey

Do the taste-color and taste-shape associations make sense?

After gathering information from relevant literature and synthesizing the results from the taste sculptures, I created a survey that asked a dozen people to associate the five basic tastes with colors and shapes. I also added a question that asked people to associate intensity and dominance with the gradient and size of forms.

The purpose of the survey is to make sure the visual system makes sense to people and feels intuitive to most of them. Most respondents made associations similar to those in the visual system, although there were some interesting exceptions, which respondents attributed to their cultural background, such as the food they grew up eating.

These results helped me determine a preliminary visual system, which will serve as the basis for the forms that represent tastes in the augmented reality app.
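One way to picture how such a visual system could drive the forms is as a small data structure. The Python below is only an illustrative sketch, not the app's implementation: the 0-to-1 intensity scale, the `TasteForm` type, the `forms_for_dish` function, the size formula, and the example values are all assumptions. Only the round-versus-sharp grouping comes from the sculpture findings; colors are left out because they are not specified here.

```python
from __future__ import annotations
from dataclasses import dataclass

# Edge style per taste, following the sculpture findings:
# round/smooth for sweet (and sometimes umami), sharp for salty, sour, bitter.
EDGE_STYLE = {
    "sweet": "round",
    "umami": "round",
    "salty": "sharp",
    "sour": "sharp",
    "bitter": "sharp",
}


@dataclass
class TasteForm:
    taste: str
    edge: str        # "round" or "sharp"
    scale: float     # relative size of the form (assumed formula below)
    dominant: bool   # True for the strongest taste in the dish


def forms_for_dish(profile: dict[str, float], min_scale: float = 0.2) -> list[TasteForm]:
    """Turn a taste profile (taste -> intensity in 0..1) into form descriptions."""
    for taste, intensity in profile.items():
        if taste not in EDGE_STYLE:
            raise ValueError(f"unknown taste: {taste}")
        if not 0.0 <= intensity <= 1.0:
            raise ValueError(f"intensity out of range for {taste}: {intensity}")

    # Keep only tastes that are present, strongest first.
    present = sorted(
        ((t, i) for t, i in profile.items() if i > 0),
        key=lambda pair: pair[1],
        reverse=True,
    )
    forms = []
    for index, (taste, intensity) in enumerate(present):
        # Assumed rule: size grows linearly with intensity, never below min_scale.
        scale = min_scale + (1.0 - min_scale) * intensity
        forms.append(TasteForm(taste, EDGE_STYLE[taste], scale, dominant=(index == 0)))
    return forms


# Hypothetical profile for an example dish (values are made up).
example_profile = {"salty": 0.6, "umami": 0.8, "sweet": 0.1, "bitter": 0.0, "sour": 0.0}
for form in forms_for_dish(example_profile):
    print(form)
```

Treating dominance as "the strongest taste" and intensity as size is just one reading of the survey question; a gradient could stand in for size without changing the structure.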

Taste in watercolor overlay

After deciding on the colors, shapes and ways of representing intensity and dominance, I started exploring ways of projecting the forms on a mobile screen. I used watercolor to represent the five tastes because it’s both aesthetically pleasing and intuitive to understand. The undefined borders and fluid nature of watercolor resemble the way tastes behave: independent, yet merging with one another.

Why Augmented Reality

Augmented reality (AR) is a technology that adds layers of digital information onto our physical world. Unlike virtual reality (VR), AR is less intrusive: it does not replace real life with an entirely artificial environment.

In this project, I am using AR to overlay sensory information in the form of floating watercolor forms to provide a sense of what a dish tastes like.

The image on the right shows a concept in which AR is used to scan mapo tofu for its ingredients, dietary information and taste profile. Users get a direct sense of what the food tastes like.

Key Screens

Next Step

Visualizing taste through artistic sculptures is only one way of communicating this sensory information. As the thesis project is still in progress, the next step is to create an AR prototype that demonstrates the concepts and use cases that can help people recognize food in unfamiliar restaurants. The goal of this thesis is to give people more certainty and confidence when they dine in a foreign restaurant, and to enhance their dining experience with an application that augments the information available to them.